Some companies are starting to use AI in their external reporting, including preparing financial statements. But there is significant risk of AI introducing errors and falsehoods into the statements. And having AI hallucinate phony numbers in a regulatory filing is a good way to end up taking a perp walk. So what’s a CFO to do? As we’ll explore today, CFOs need to prioritize AI governance in external reporting. Here’s how.

Any day now. There’s no question that AI offers real value in the external reporting process, but there are significant risks.

In the loop. Using AI in financial statements and other disclosures requires a “human in the loop” to prevent and catch hallucinations.

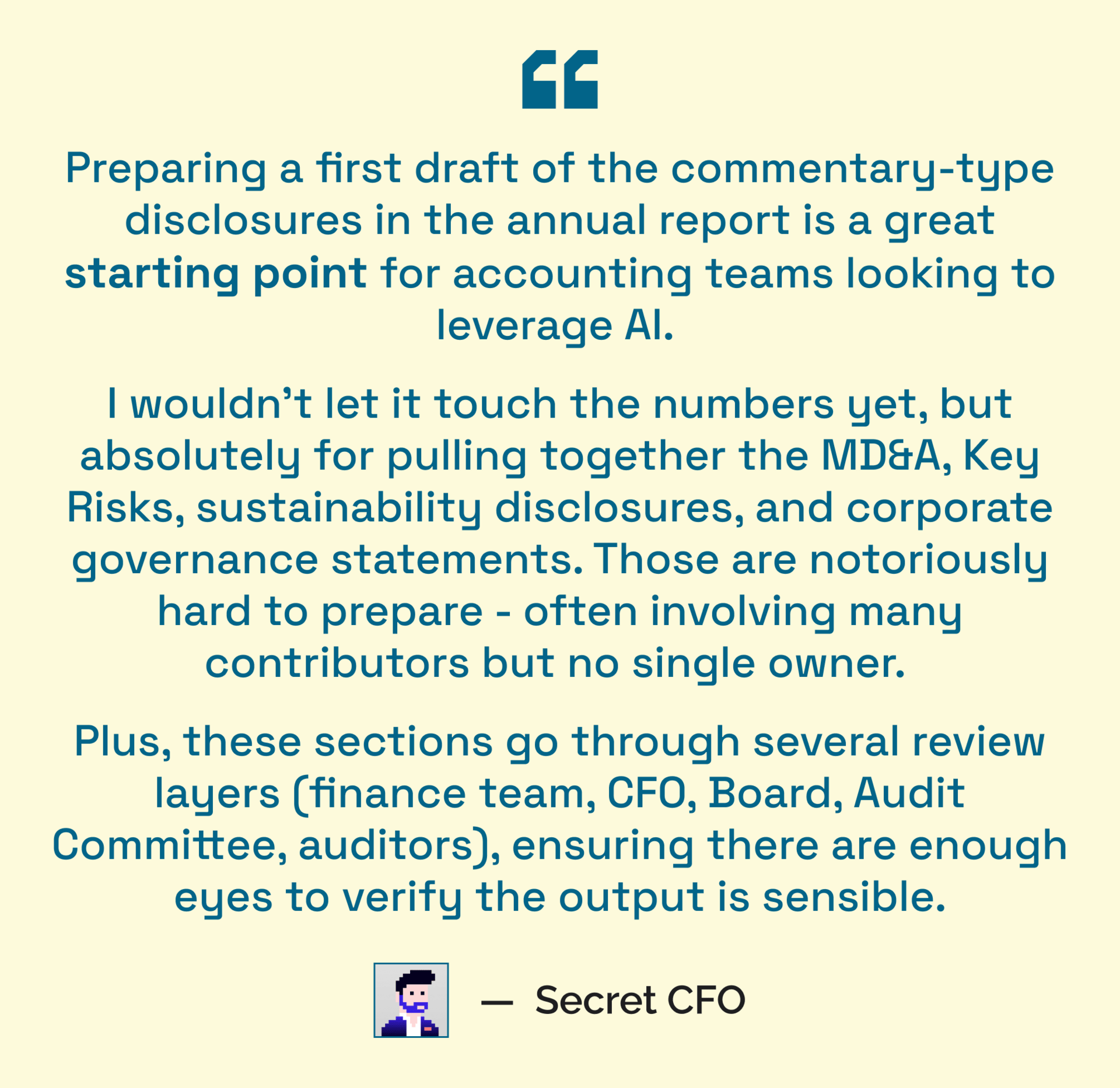

Safety net. Annual reports could be a good place to test AI in the CFO’s domain, since few other finance workflows have so many review layers by default.

—

Read time: 9 minutes 52 seconds

⧗ Written by Katishi Maake and Secret CFO

A WORD FROM THIS WEEK’S SPONSOR...

ERP implementations should be measured in weeks, not years

Long, messy rollouts and vague timelines derail momentum. Let's rewrite the ERP story.

Campfire customers are going live in weeks, thanks to a guided implementation that’s predictable, transparent, and manageable for real teams with real workloads.

Hewlett Packard and ON Semiconductor, both with market caps in the tens of billions, recently told the Wall Street Journal that they are starting to use AI to write MD&As, gather basic numbers for the balance sheet, and write first drafts of their financial statements.

For Hewlett Packard, first drafts are just the beginning of AI’s role in their financial reporting. HP CFO Marie Myers told the Wall Street Journal that the company hopes to “have both the financial and non-financial parts of the company’s SEC filings written by AI at some point,” with final human oversight.

So, is that a good idea? Either way, these moves raise the question: what role, if any, should AI play in regulatory reporting and disclosure? Are activities like financial reporting, climate disclosures, and regulatory compliance ready for AI? Should organizations risk noncompliance by turning financial statements or other regulator-mandated disclosures over to AI? Or is financial reporting, with its deep layers of review, cross-checking, and audits, exactly the right place to start, with AI preparing the first draft before handing it back to humans for further scrutiny?

“How do you use AI responsibly, use it ethically?” said Deepa Rao, the AI Governance & Sustainability Leader for IT consulting firm Cognizant. “What are the judgment parameters so that the ultimate aim is to make sure that the stakeholders have full trust in what you are reporting using AI?”

But if using AI for disclosures and financial reporting requires extra vigilance and oversight, what does that look like in practice?

First draft

Rao said that Cognizant is using AI across its external reporting and other disclosures: gathering data, analyzing it, and drafting narratives.

There is a real use case for AI in external and financial reporting. Items like financial statements and sustainability disclosures require parsing vast amounts of information drawn from numerous functions and offices across the organization.

Then there is the work of writing and proofing the dense, legally required narrative statements and disclaimers that extend well beyond the financials. Plus, there’s the time and energy required to coordinate all of that work.

Doesn’t it make sense to turn all that over to an AI that can quickly consume that disparate material and produce a cohesive (and time-saving) narrative?

There’s real value in getting to that first draft quickly; parsing quality information from across the organization into a single narrative is hard work and takes a long time. Having an AI get you there quickly, even as a starting point, offers a lot of benefits.

For Rao, AI is particularly useful for improving human-written first drafts of sustainability reports, regulatory disclosures, and repetitive narrative sections, but still requires intensive human oversight.

“When the AI writes, it sounds amazing, but there is so much in there that should not be taken literally,” she said. “The CEO or the CFO has to make sure that whatever the AI has written makes sense, because even one word can make a big difference.”

Making it right

To help catch potential errors and manage AI-quality risk, Rao developed something she calls an “AI Governance Board-Level Framework.” While the framework is focused on sustainability reporting at Cognizant, Rao says her guidelines on AI governance can be “very universal from an external reporting perspective when using AI.”

Rao recommends the following guidelines, which she uses to shape Cognizant’s use of AI in external reporting:

Come together. Before reporting begins, Rao established an AI governance team that includes IT, finance, and vendor representatives to provide proper controls and testing of AI models. For example, when Cognizant prepares a System and Organization Controls (SOC) report, multiple teams are involved, and they all need to agree in advance on AI internal controls across the different processes and stages of reporting to prevent clashes or confusion.

Rao suggests teams should also set guidelines for how much AI-generated content can be used in external reporting without human review. They should also develop a framework for documenting and tracing where AI was used in decision-making, so the board and auditors can understand the extent of AI reliance in external reporting.

“There are too many blind spots when you use AI without letting the board know where AI was used,” Rao said. “When it comes to the whole report, it's such a mishmash of so many AI models put together. Most of them don't have governance and the board does not know where you've hit and where you've missed because AI was used and it is so difficult to untie that knot.”
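To make the tracing idea a little more concrete, here is a minimal sketch in Python of an AI-use register. It is not Cognizant's framework; the record fields (section, whether AI was used, which model, who reviewed it) and the summary shape are hypothetical choices made for illustration. The point is that if every section of a report carries a record like this, the board can see at a glance how many sections relied on AI, which models were involved, and which AI-touched sections still lack a named human reviewer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIUsageRecord:
    """One entry in a hypothetical register of where AI touched a report."""
    section: str                    # report section, e.g. "MD&A - liquidity"
    ai_used: bool                   # did an AI model generate or edit this content?
    model: Optional[str] = None     # free-text label for the model, if any
    reviewer: Optional[str] = None  # named human who signed off, if any


def board_summary(records: list[AIUsageRecord]) -> dict:
    """Summarize AI reliance so the board and auditors can see where AI was used."""
    ai_sections = [r for r in records if r.ai_used]
    # Any AI-touched section without a named reviewer is a blind spot to flag.
    unreviewed = [r.section for r in ai_sections if r.reviewer is None]
    return {
        "sections_total": len(records),
        "sections_with_ai": len(ai_sections),
        "models_used": sorted({r.model for r in ai_sections if r.model}),
        "ai_sections_without_review": unreviewed,
    }


# Illustrative register; section names, models, and reviewers are made up.
register = [
    AIUsageRecord("Balance sheet notes", ai_used=True, model="model-a", reviewer="Controller"),
    AIUsageRecord("MD&A - liquidity", ai_used=True, model="model-b"),
    AIUsageRecord("Risk factors", ai_used=False),
]
print(board_summary(register))
```

In this example, the summary flags "MD&A - liquidity" as AI-drafted with no reviewer, which is exactly the kind of gap Rao says the board otherwise cannot see.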

First things first. Cognizant requires parallel runs of manual and AI-driven processes to verify the AI’s accuracy before fully transitioning to AI-only processes. The company has also created clear processes and accountability for reviewing the biases and limitations of the AI models in use, so employees know when to trust AI outputs and when to question them.

“You have to do your reconciliation the way you used to do earlier, and use AI and see if your answers are the same,” Rao said. “Only if your answer, the calculation is the same, then you know your AI is working properly.”
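A parallel run like the one Rao describes boils down to a line-by-line comparison. The Python sketch below is a minimal illustration under assumed inputs: each run is a hypothetical mapping of line item to amount, and the default tolerance of zero mirrors Rao's rule that the answers must be the same. It also flags line items that appear in only one run, since an item the AI produced with no manual counterpart may be a hallucinated number.

```python
from decimal import Decimal


def parallel_run_diff(manual: dict[str, Decimal], ai: dict[str, Decimal],
                      tolerance: Decimal = Decimal("0")) -> dict[str, str]:
    """Compare a manual reconciliation against an AI-produced one, line by line.

    Returns a mapping of line item -> reason for every item that does not match.
    An empty result means the two runs agree within the tolerance.
    """
    issues = {}
    for item in sorted(manual.keys() | ai.keys()):
        if item not in ai:
            issues[item] = "missing from AI output"
        elif item not in manual:
            # The AI reported something the manual process never produced.
            issues[item] = "not in manual run (possible fabricated line)"
        elif abs(manual[item] - ai[item]) > tolerance:
            issues[item] = f"manual {manual[item]} vs AI {ai[item]}"
    return issues


# Hypothetical figures for illustration only.
manual_run = {"Cash": Decimal("1250000.00"), "Receivables": Decimal("430500.00")}
ai_run = {"Cash": Decimal("1250000.00"), "Receivables": Decimal("435000.00"),
          "Prepaids": Decimal("12000.00")}
print(parallel_run_diff(manual_run, ai_run))
```

Using `Decimal` rather than floating point keeps currency amounts exact, so a zero tolerance means what it says. Only when this comparison comes back empty, period after period, would the AI process have earned more trust.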

Eyes wide open. For all the talk of AI replacing humans, Rao says there still needs to be a “human in the loop” approach. At Cognizant, employees review and validate AI outputs before relying on them. For now, that means reporting processes can’t be fully turned over to AI.

“Basically someone needs to make sure that the number that AI is capturing is correct,” Rao said.

Truth be told

But… there’s a dishonest elephant in the room: AI sometimes just makes stuff up. These fictions are affectionately known as “hallucinations,” which is exactly the kind of word an audit committee does not want to hear when you’re reassuring its members that a 10-K is absolutely, materially correct. In other words, financial statements and other disclosures are the last place an organization wants trippy fiction to appear.

“It [AI] can totally make up a disclosure and make it sound like it’s real,” Ally Zimmerman, associate professor of business administration at Florida State University, told us. “I think you'll still always have to check your work.”

For companies using AI to help research and write financial disclosures and statements, checking AI’s work is non-negotiable, Zimmerman added. That means human eyes making sure the numbers are real and add up.
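One of the simplest of those human checks, confirming that the numbers add up, can be automated as a backstop. The Python sketch below is a minimal footing check under assumed inputs: a hypothetical list of line-item amounts from an AI-drafted note and the total the draft states for them. It only catches arithmetic that does not foot; confirming that each number is real still means tracing it back to source records.

```python
from decimal import Decimal


def foots(components: list[Decimal], stated_total: Decimal) -> bool:
    """Return True if the line items sum exactly to the total stated in the draft."""
    return sum(components, Decimal("0")) == stated_total


# Hypothetical figures from an AI-drafted note: the components sum to 1,692,500.00,
# but the draft states a total 1,000 higher.
current_assets = [Decimal("1250000.00"), Decimal("430500.00"), Decimal("12000.00")]
print(foots(current_assets, Decimal("1693500.00")))  # False
```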

The consequences of not doing so can be devastating.

“Companies have to be extremely careful because this is going out not only to the regulatory authorities, but also to the street, and it could be market-moving,” said Ash Mehta, who researches AI in finance at Gartner. “You always have the risk of hallucinations, and misleading commentary could significantly mislead investors.”

Still, the day may soon come when companies follow Hewlett Packard’s lead in turning external reporting over to AI. Mehta believes pressure from boards, CEOs, and competitors should push even the most skeptical CFOs to embrace AI in external reporting.

“This isn’t a question of whether finance should do this. It’s an imperative,” he said.

Just don’t believe everything you read on that first draft.

Reading The Room…

The questions your board will ask. Beat them to it:

Data Privacy. Can you guarantee our data isn't training public models accessible to our competitors?

Review Layers. Are existing controls capable of detecting AI hallucinations, or do we need new layers?

Model Auditing. Should we use a second, distinct AI model to cross-check and audit the first?

External Audit. Does this change our risk profile with external auditors, increasing their scope or fees?

Executive Ownership. How do we ensure CXOs retain ownership and don't just rubber-stamp AI-generated drafts?

Historical Bias. How do we prevent the AI from reinforcing historical biases we are trying to change?

Continuity Risk. If the AI fails near the deadline, does the team retain manual drafting capabilities?

Boardroom Brief is presented by The Secret CFO Network

A new improved Boardroom Brief will be back in February after a brief hiatus!